wotw, gut punch

means something knocks the wind out of you literally a punch to the gut. means to be suddenly be emotionally wound up, because of something you see or hear.

like I am about to do to you …

wotw, gut punch

means something knocks the wind out of you literally a punch to the gut. means to be suddenly be emotionally wound up, because of something you see or hear.

like I am about to do to you …

Here we will get to know about the If-else, control flow.

As the name says (if) it simply applies the condition applied to it. If the value of the expression is true, the command works according to it.

#!usr/bin/env/ python 3

number= int(input("Enter a number:"))

if number<100

print("the number is less than 100")

(if)statement is not fulfilled.

#!usr/bin/env python3

number= int(input("Enter a number:")

if number<100

print("The number is less than 100");

else

print("The number is greater than 100");

cse@cse-Vostro-3470:~$ cat employee

eid ename desg dependent age salary

001 Arun Engineer Master 30 35000

010 Mrinal Engineering Admin 21 45000

101 Ajay Civil Assist superMembeer 22 54010

101 rabeen developer R&d 25 9000

001 Arun Engineer Master 30 35000

010 Mrinal Engineering Admin 21 45000

01 Ajay Civil Assist superMembeer 22 54010

101 rabeen developer R&d 25 9000

001 Arun Engineer Master 30 35000

cse@cse-Vostro-3470:~$ sort -n employee eid ename desg dependent age salary 001 Arun Engineer Master 30 35000 001 Arun Engineer Master 30 35000 001 Arun Engineer Master 30 35000 01 Ajay Civil Assist superMembeer 22 54010 010 Mrinal Engineering Admin 21 45000 010 Mrinal Engineering Admin 21 45000 101 Ajay Civil Assist superMembeer 22 54010 101 rabeen developer R&d 25 9000 101 rabeen developer R&d 25 9000

cse@cse-Vostro-3470:~$ sort -nr employee

101 rabeen developer R&d 25 9000

101 rabeen developer R&d 25 9000

101 Ajay Civil Assist superMembeer 22 54010

010 Mrinal Engineering Admin 21 45000

010 Mrinal Engineering Admin 21 45000

01 Ajay Civil Assist superMembeer 22 54010

001 Arun Engineer Master 30 35000

001 Arun Engineer Master 30 35000

001 Arun Engineer Master 30 35000

eid ename desg dependent age salary

cse@cse-Vostro-3470:~$ sort -u employee

001 Arun Engineer Master 30 35000

010 Mrinal Engineering Admin 21 45000

01 Ajay Civil Assist superMembeer 22 54010

101 Ajay Civil Assist superMembeer 22 54010

101 rabeen developer R&d 25 9000

101 rabeen developer R&d 25 9000

eid ename desg dependent age salary

cse@cse-Vostro-3470:~$ sort -ur employee

eid ename desg dependent age salary

101 rabeen developer R&d 25 9000

101 rabeen developer R&d 25 9000

101 Ajay Civil Assist superMembeer 22 54010

01 Ajay Civil Assist superMembeer 22 54010

010 Mrinal Engineering Admin 21 45000

001 Arun Engineer Master 30 35000

-t in sort can be used as specifying delimiter sort -t” “ +4 -5n employee : to sort according to particular column

cse@cse-Vostro-3470:~$ sort -t” “ +4 -5n employee

01 Ajay Civil Assist superMembeer 22 54010

101 Ajay Civil Assist superMembeer 22 54010

eid ename desg dependent age salary

010 Mrinal Engineering Admin 21 45000

010 Mrinal Engineering Admin 21 45000

101 rabeen developer R&d 25 9000

101 rabeen developer R&d 25 9000

001 Arun Engineer Master 30 35000

001 Arun Engineer Master 30 35000

001 Arun Engineer Master 30 35000

cse@cse-Vostro-3470:~$ sort -k3 employee

01 Ajay Civil Assist superMembeer 22 54010

101 Ajay Civil Assist superMembeer 22 54010

eid ename desg dependent age salary

101 rabeen developer R&d 25 9000

101 rabeen developer R&d 25 9000

010 Mrinal Engineering Admin 21 45000

010 Mrinal Engineering Admin 21 45000

001 Arun Engineer Master 30 35000

001 Arun Engineer Master 30 35000

001 Arun Engineer Master 30 35000

cse@cse-Vostro-3470:~$ sort -t” “ +4 -5n +1 -2r employee : Sorts 5th field first leaving till 4th field then again perform sort on the 2nd field on the sorted list.

eid ename desg dependent age salary

01 Ajay Civil Assist superMembeer 22 54010

101 Ajay Civil Assist superMembeer 22 54010

010 Mrinal Engineering Admin 21 45000

010 Mrinal Engineering Admin 21 45000

101 rabeen developer R&d 25 9000

101 rabeen developer R&d 25 9000

001 Arun Engineer Master 30 35000

001 Arun Engineer Master 30 35000

001 Arun Engineer Master 30 35000

cse@cse-Vostro-3470:~$ sort -k2,4r student 1si17cs097 Sarthak 5 S+ 1si17cs071 Nitish 5 B USN NAME SEM GRADE 1si17cs062 Mrinal 5 S+ 1si17cs061 Mrigank 4 S 1si17cs058 Mihir 3 S+ 1si17cs052 Kshtiz 1 C

grep "unix" filename.txt : prints only line containing unix also higlights the “unix ” wherever it is present.

unix is great os. unix is opensource. unix is free os.

unix linux which one you choose.

unix is easy to learn.unix is a multiuser os.Learn unix .unix is a powerful.

grep -n "unix" filename.txt : prints only line containing unix and also gives the no. line.

1:unix is great os. unix is opensource. unix is free os.

3:unix linux which one you choose.

4:unix is easy to learn.unix is a multiuser os.Learn unix .unix is a powerful.

$grep "[2-3][0-9]" emp : Check for the string from 20 to 39

TCS101 Anu Engineer Manufacturing 30 35000

INFY02 Sona Developer Development 25 29000

TCS102 Raki Accountant Admin 31 32000

INFY03 Arun Engineer Manufacturing 28 34000

INFY04 Amit Accountant Admin 24 27000

TCS103 Nanda Engineer Manufacturing 29 37000

TCS104 Swathi Developer Development 28 29000

$ grep -v "[2-3][0-9]" emp :Invert the sense of matching, to select non-matching lines.

$ grep -n "[2-3][0-9]" emp : Displays in ordered list.(i.e. with line number) with string matching from 20 to 39.

1:TCS101 Anu Engineer Manufacturing 30 35000

2:INFY02 Sona Developer Development 25 29000

3:TCS102 Raki Accountant Admin 31 32000

4:INFY03 Arun Engineer Manufacturing 28 34000

5:INFY04 Amit Accountant Admin 24 27000

6:TCS103 Nanda Engineer Manufacturing 29 37000

7:TCS104 Swathi Developer Development 28 29000

$ grep -n "TCS[0-3][0-9][0-9]" emp : Displays only TCS employee details with line number present in the original file ranging from TCS000 to TCS399.

1:TCS101 Anu Engineer Manufacturing 30 35000

3:TCS102 Raki Accountant Admin 31 32000

6:TCS103 Nanda Engineer Manufacturing 29 37000

7:TCS104 Swathi Developer Development 28 29000

*NOTE : emp and student are the file names.*

unix is great os. unix is opensource. unix is free os. learn operating system. unix linux which one you choose. unix is easy to learn.unix is a multiuser os.Learn unix .unix is a powerful.

sed -e 's/unix/UnIx/g' filename.txt : Searches for unix and replaces it with UnIx everywhere. Here g stands for making changes globally.

UnIx is great os. UnIx is opensource. UnIx is free os.

learn operating system.

UnIx linux which one you choose.

UnIx is easy to learn.UnIx is a multiuser os.Learn UnIx .UnIx is a powerful.

linux is easy to learn.linux is a multiuser os.Learn linux .linux is a powerful.

sed 's/unix/UnIx/1g' filename.txt : Replaces with Unix globally from the first occurrence in a line.

UnIx is great os. UnIx is opensource. UnIx is free os.

learn operating system.

UnIx linux which one you choose.

UnIx is easy to learn.UnIx is a multiuser os.Learn UnIx .UnIx is a powerful.

linux is easy to learn.linux is a multiuser os.Learn linux .linux is a powerful.

sed '3,5s/unix/UnIx/' filename.txt : It will replace the unix with UnIx from 3rd line till 5th line if present.

unix is great os. unix is opensource. unix is free os.

learn operating system.

UnIx linux which one you choose.

UnIx is easy to learn.unix is a multiuser os.Learn unix .unix is a powerful.

linux is easy to learn.linux is a multiuser os.Learn linux .linux is a powerful.

sed '1,2s/unix/UnIx/' filename.txt: It will replace the unix with UnIx from 1st line and 2nd line.

UnIx is great os. unix is opensource. unix is free os.

learn operating system.

unix linux which one you choose.

unix is easy to learn.unix is a multiuser os.Learn unix .unix is a powerful.

linux is easy to learn.linux is a multiuser os.Learn linux .linux is a powerful.

sed '1,$s/unix/UnIx/' filename.txt : It will replace the unix with UnIx from 1st line till the last line. Here “$” indicates end line.

UnIx is great os. unix is opensource. unix is free os.

learn operating system.

UnIx linux which one you choose.

UnIx is easy to learn.unix is a multiuser os.Learn unix .unix is a powerful.

linux is easy to learn.linux is a multiuser os.Learn linux .linux is a powerful.

sed '/unix/ a "Add a new line"' filename.txt : It adds “Add a new line” at the end of the line in which unix occurred.

unix is great os. unix is opensource. unix is free os.

“Add a new line”

learn operating system.

unix linux which one you choose.

“Add a new line”

unix is easy to learn.unix is a multiuser os.Learn unix .unix is a powerful.

“Add a new line”

linux is easy to learn.linux is a multiuser os.Learn linux .linux is a powerful.

sed '/unix/ i "Add a new line"' filename.txt : i means insert the “Add new line” before the occurence of unix

“Add a new line”

unix is great os. unix is opensource. unix is free os.

learn operating system.

“Add a new line”

unix linux which one you choose.

“Add a new line”

unix is easy to learn.unix is a multiuser os.Learn unix .unix is a powerful.

linux is easy to learn.linux is a multiuser os.Learn linux .linux is a powerful.

sed '/unix/ c "Add a new line"' filename.txt : replace the line containing unix with the content “Add new line”

“Add a new line”

learn operating system.

“Add a new line”

“Add a new line”

In Python there is no need to import any external libraries for file handling as it comes with an inbuilt library There are 3 main operations associated with the file. 1. Creating/Opening a file. 2. Writing into the file/ Reading from the file. 3. Closing a file.

Syntax :

filePtr = open("your_file_name.txt", "mode")

Following are the modes supported in python:

1. “w”, “r”, “a”: To open files in write mode, read mode and append mode respectively.

ex:

filePtr = open("my_file.txt", "w")

It will create a new file if the given file name doesn't exist else it will overwrite the contents of the given file.

filePtr = open("my_file.txt", "r")

It will open a file only if it exists in the reading mode. i.e. we cannot edit the contents of the file.

filePtr = open("my_file.txt" , "a")

It will append the contents of the file if already exists else it will create a new one.

filePtr = open("my_file.txt", "w+")

It will create a new file if the given file name doesn't exist else it will overwrite the contents of the given file. In this mode, we can also read the content of the file at the same time.

filePtr = open("my_file.txt", "r+")

It will open a file only if it exists in the reading mode also we can edit the contents of the file unlike in “r” mode.

** Note: +r differs from +w mode as in +r mode it doesn't delete the content also it doesn't create a new file if doesn't exist.**

filePtr = open("my_file.txt" , "a+")

It will allow simultaneous read and append operation on the file.

filePtr = open("my_file.txt", "wb")

It will create a new file if the given file name doesn't exist else it will overwrite the contents of the given file.filePtr = open("my_file.txt", "rb")

It will open a file only if it exists in the reading mode. i.e. we cannot edit the contents of the file.

filePtr = open("my_file.txt" , "ab")

It will append the contents of the file if already exists else it will create a new one.

filePtr = open("my_file.txt", "wb+")

It will create a new file if the given file name doesn't exist else it will overwrite the contents of the given file. In this mode, we can also read the content of the file at the same time.

filePtr = open("my_file.txt", "rb+")

It will open a file only if it exists in the reading mode also we can edit the contents of the file unlike in “r” mode.

filePtr = open("my_file.txt" , "ab+")

It will allow simultaneous read and append operation on the file.

We can write in a file using write() function

Syntax :

filePtr.write("THIS is a file CONTENT")

We can read from the file using read() and readline() function

ex:

content = filePtr.read(): This return the entire content of the file.

content = filePtr.readline(): This returns only the content of one line in a file.

Closing a file is very important as if it exceeds the top limit it can lead to the crashing of the program. So it is advisable to close a file.

Syntax :

filePtr.close()

This is the second part of the series, in the first part we talked about the general idea of concurrency, how it's different from parallelism and saw how Python handles concurrency.

Part 1: Talking Concurrency -1

In the second part of the blog, we will look into the modern solution towards the problem using the new Asyncio module.

In the last post, we looked into a basic code snippet on how can we write concurrently. We also discussed some of the basic terminology used while using the Asyncio module. If you don't remember you should quickly take a recap as we would look at those concepts in a bit detailed manner.

Before looking at some code, let's understand some basic terminologies that would help in understanding the code better.

Eventloop: it's an infinite loop that keeps track of all the running tasks. It manages all the suspended functions and executes them when the time is right. These functions are stored in the queue called as the Task Queue, the event loop constantly polls the task queue and passes them to the event loop. When a task is passed on to the event loop it returns back a future object.

Future: a future is an indirect reference to a forthcoming result. It can loosely be translated as promise you make to do something when a condition is met, so when the condition is met a future can “callback” when ready to be executed. Since everything is an object in python, future is also an object that has the __await__() method implemented and its job is to hold a certain state and result. The state can be one of three things:

Pending: it does not have a result or exception yet. Cancelled: it was canceled Finished: it was finished either with a result or exception.

Futures also have a method called the add_done_callback() this is method allows the function to be called as soon as the task is completed with its process and is returned with a result. Which is the python object that would be returned with the expected result or raise an exception when the task is finished.

asyncio.create_task(coroutine) wraps the coroutine into a task and schedules its execution. asyncio.create_task(coroutine) returns a task object. Every time a coroutine is awaited for a future, the future is sent back to the task and binds itself to the future by calling the add_done_callback() on the future. From now on if the state of the future changes from either canceled or finished, while raising an exception or by passing the result as a python object. The task will be called and it will rise back up to its existence.Since a typical program will have multiple tasks to be executed concurrently, we create normally with asyncio.create_task(coroutine) but we run them with asyncio.gather().

@asyncio.coroutine which used a yield from keyword. Later in Python 3.5 async and await keywords were introduced which made working/reading concurrent code much easier. I won't go into much detailed on how coroutines evolved to the new async def keyword, because I planning to write a separate blog on that.As we looked into the basic definition of coroutines in the last blog, we can loosely describe them as restartable functions.

You make a coroutine with the help of the async def keyword and you can suspend the coroutine with the await keyword. Every time when you await the function gets suspended while whatever you asked to wait on happens, and then when it's finished, the event loop will wake the function up again and resume it from the await call, passing any result out. Since coroutines evolved from generators and generators are iterators with __iter__() method, coroutines also have __await__() which allows them to continue every time await is called.

At each step a coroutine does three things:

Before moving forward, I want to talk about await. In Python, anything that can be awaited i.e used with the await keyword is called an awaitable object. The most common awaitable that you would use would be coroutines, futures and tasks. Thus anything is blocking get's put to the event loop using the await and added to the list of paused coroutines.

Now let's look at a very basic async program to understand how everything fits in together.

import asyncio

import asyncio

async def compute(x, y):

print("Compute %s + %s ..." % (x, y))

await asyncio.sleep(1.0)

return x + y

async def print_sum(x, y):

result = await compute(x, y)

print("%s + %s = %s" % (x, y, result))

asyncio.run(print_sum())

The sequence diagram below describes the flow of the above program.

Now that we know all the basic terminology used in an async program let's look at a slightly complex code below for getting a better understanding all the jargons we learned above.

import asyncio

async def compute(x, y):

"""

A coroutine that takes in two values and returns the sum.

"""

print(f"Computing the value of {x} and {y}")

await asyncio.sleep(1)

return x + y

async def print_sum():

"""

A coroutine that creates tasks.

"""

value1 = asyncio.create_task(compute(1, 0))

value2 = asyncio.create_task(compute(1, 0))

value3 = asyncio.create_task(compute(1, 0))

print(sum(await asyncio.gather(value1, value2, value3)))

asyncio.run(print_sum())

async def print_sum() and async def compute() are the two coroutines in the above program, the async def print_sum() as the main function used in the sync programming. The main function executes the entire program and all the functions related to it. The same approach is followed here, one coroutine awaits all the other coroutine.

Though this can be easily miss-understood, in that case, the program would just fine but would run in more like a sequential manner.

value1 = await asyncio.create_task(compute(1, 0))

value2 = await asyncio.create_task(compute(1, 0))

value3 = await asyncio.create_task(compute(1, 0))

print(sum(value1, value2, value3))

The above code can be a good example of how not to write async code, here using await on every task we are making all the calls sync thus making the program sequential. To avoid this asyncio.gather() is used in the program. To gather all the tasks in the program, value1, value2 and value3.

Finally, when all the tasks are gathered together, they are run concurrently.

A lot of time you might be in a situation where you might have to call a sync function def from coroutine async def or have to call coroutine async def from sync function def. Ideally, you “shouldn't” use sync functions for calls that can be async like a database call because that is something that could provide further optimization. But there is nothing wrong with using a synchronous library for database, an async library for HTTP and gradually move things to async.

Calling a sync function def from a coroutine async def. In that case, you run the sync function in a different thread using the threadpool executor. The runinexecutor() method of the event loop takes an executor instance, a regular callable to invoke, and any arguments to be passed to the callable. It returns a Future that can be used to wait for the function to finish its work and return something.

import asyncio

import concurrent.futures

def blocking_io():

# File operations (such as logging) can block the

# event loop: run them in a thread pool.

with open('/dev/urandom', 'rb') as f:

return f.read(100)

def cpu_bound():

# CPU-bound operations will block the event loop:

# in general it is preferable to run them in a

# process pool.

return sum(i * i for i in range(10 ** 7))

async def main():

loop = asyncio.get_running_loop()

## Options:

# 1. Run in the default loop's executor:

result = await loop.run_in_executor(

None, blocking_io)

print('default thread pool', result)

# 2. Run in a custom thread pool:

with concurrent.futures.ThreadPoolExecutor() as pool:

result = await loop.run_in_executor(

pool, blocking_io)

print('custom thread pool', result)

# 3. Run in a custom process pool:

with concurrent.futures.ProcessPoolExecutor() as pool:

result = await loop.run_in_executor(

pool, cpu_bound)

print('custom process pool', result)

asyncio.run(main())

When you have to call coroutines from the normal sync function. You just have to manually get_event_loo() , create tasks() and call the asyncio.gather() function. Since you can await, one thing you can do is create a queue with asyncio.queue() and use that queue to pass around the data between different coroutines.

import asyncio

async def compute(x, y, data):

print(f"Computing the value of {x} and {y}")

result = x + y

await data.put(result)

async def process(n, data):

processed, sumx = 0, 0

while processed < n:

item = await data.get()

print(item)

processed += 1

value = item

sumx += value

print(f"The sum is:{sumx}")

await asyncio.sleep(.5)

def main():

loop = asyncio.get_event_loop()

data = asyncio.Queue()

sum1 = loop.create_task(compute(1, 4, data))

sum2 = loop.create_task(compute(0, 0, data))

sum3 = loop.create_task(process(2, data))

final_task = asyncio.gather(sum1, sum2, sum3)

loop.run_until_complete(final_task)

if __name__ == '__main__':

main()

Write a program that reads log files and refires those URLs that have a 5xx status code. Once the refiring is done just add the &retry=True in the prefix of the URL and store them in a separate log file.

The log file will be a text file, you can check out a sample file here.

Just before ending the blog I would like to thank maxking and Jason Braganza for helping me out in the blog.

In the next part of the series, I will be talking about threads and finally will conclude the series with asyncio based frameworks such as quart and aiohttp.

Happy Coding!

wotw, antifragile

to be someone, who is more than resilient. to be someone, who thrives when the world breaks you!

to learn how, spend an hour and watch the videos below.

The above quote clearly replicates confidence and self-love. We somehow get affected by the negative comments and judgments passing by and try to change ourself so that people would accept us the way we are. Finally, when we arrange ourselves for the people to accept us there is again a judgment passing by and yet again we try to change ourselves. In the end, we forget the real us and be a toy for people's satisfaction. as we grow old we realize that we have left the true selves way back and been another person. Fat-shaming or Body shaming, being a dark-skinned girl or being extra bold even wearing a dark lipstick at daytime, or wearing clothes of your own choice attracts people's attention more than taking care of social and devastating issues happening day-to-day. Have you ever heard someone saying that look at the person who threw the packets on the side of a road or look at the person who is pissing at a public place, why aren't they ashamed? Yes, I am healthy and have a unique body shape that maybe most girls don't have or most guys make fun of it. So what? yes I am like this and I am proud of it but you will ignore it once or twice or maybe thrice. Ignorance is not a solution the mentality should be changed, and it can only change if people start teaching their children the value of respect and acceptance, acceptance of people as they are. That obviously doesn't include the acceptance of wrongdoings and violent nature of some people, but accepting the things to make this a society and a better place to live. Only then we can stand together and build a healthy community.

wotw, myth

the stories we tell ourselves as a culture, to explain what we could not really explain.

to help use make sense of the world, so that we’d not fuss over the unexplained and let us get on with our lives and make progress with the things we do understand.

After so many days I am continuing with Python again. Yes, I was not able to maintain the series properly, but let's get back to work and know what I read next in Python from the book of PYM. In Python most of the lines will have expressions and these expressions are made of operators and operands.

These are the symbols which tells the python interpretor to do the mathematical operation.

2+3

5

22.0/12

1.83333

To get floating results we need to use the division using any of operand as the floating number. To do modular operation use % operator.

#!/usr/bin/envv python3

days= int(input("Enter days:"))

month= days/30

days= days%30

print("Months= %d days= %d %(month, days))

< is less than > is greater than <= is less than or equal to >= is greater than or equal == is equal to != is not equal to

Note: // operator gives floor division result.

4.0//3

1.0

4.0/3

1.33333

To do a logical AND, OR we use these keywords.

One month ago I attended DevConf India 2019. It was held from August 2-3 at Christ University, Bangalore. It was quite a while since I attended a conference, the last one being PyCon India 2018 . Due to my laziness in writing I have altogether missed writing my Conference experiences till now. This will be in fact my first Conference blog post. Now on I will make sure to write about each of the conferences I go.

I came to know about DevConf India last year when it was first held, from people in my developer circle. DevConf India is organised by Red Hat and has similar events in US and Czech Republic. Since I was in Bangalore this year I made sure I attend it after I saw the dates in Twitter. I registered as soon as I came to know about it.

I mostly planned on meeting up with people and attend a few talks. I started at 8.30 am from my place and unfortunately missed the Keynote owing to a bad experience with a Bike Taxi service. I reached the Venue around 10 am and collected my Attendee badge and T-shirt. Then I headed towards the Keynote Session Hall where I met Naren from Chennaipy. I earlier met him at PyCon India 2018. It was nice catching up with him.

After having breakfast at the venue I headed to the Booth area where I met Chandan . I started visiting the booths asking questions about various projects like Fedora, Debian, CentOS. Shortly after I met up with some more familiar faces from Dgplug – Sayan, Rayan , all of them I met during PyCon India last year. I expected a Dgplug staircase meeting at DevConf but unlike last year there were less attendees this time. After that we went for lunch at the Cafeteria where I met Ramki , Praveen and pjp . Few days earlier I was reading pjp’s tutorial regarding gcc and gdb from dpglug irc logs . It was nice to catch up with him in person. I was discussing with Sayan and Praveen about the initial days of dgplug at my college at NIT Durgapur, attending their first talk in 2013 when I had just joined my college.

After Lunch I decided to attend few talks. I attended a talk regarding Evolution of Containers – there I came across terms like Chroot, Cgroups, Namespaces , how the whole container ecosystem was born. I have been always been inquisitive about containers and though I haven’t really worked on containers before this talk really fascinated me to dive into the world of containers.

Then I attended a talk on What hurts people in Open Source Community . The talk helped to set my expectations right regarding contribution to a Open source project and Community.

After that I went to the Closing Keynote of the day shortly after which we went for evening snacks where we had more discussions over Coffee and Dosa – we noticed a item mentioning ‘Open Dosa’ over which we laughed a lot  . And it was finally close of the day.

. And it was finally close of the day.

I reached a little late to the venue and went straight to the talk that I didn’t want to miss. It was a Documentation BoF where speakers were discussing how to create effective documentation and tools for creating collaborative documentation. I came across User Story based documentation and tools of the trade like asciidoc and asciidoctor . I met Ladar Levison there during that session and talked with him regarding better project organisation. He gave me his business Card which mentioned Lavabit . Little did I know about him until I read this article which explained more about Lavabit and his role in Snowden’s secure email communication. But that was after this conference and I wished I could talk more about Privacy and Lavabit projects.

After that I went for lunch with Sayan, Chandan and Rayan where we chatted on lot of different stuff on open source, food and conferences. After Lunch I went to attend Sinny‘s talk on Fedora CoreOS whom Sayan introduced last day.

Finally it was nearing the end of the day. I went to attend the closing keynote by Jered Floyd and sat beside Christian Heimes from Red Hat who was sharing anecdotes from his travel experiences.

I made few notes that I would like to share from my experience at the Conference and also as a note to me for future Conferences

And yes don’t forget to take pictures  It really bring memories. It may sound weird but this is something I really forget every time I meet up with people and later wait for Conference photos.

It really bring memories. It may sound weird but this is something I really forget every time I meet up with people and later wait for Conference photos.

Two and a half months earlier I shifted to Bangalore after a 2 years stint at my first Company at Hyderabad. I was looking for new opportunities and started appearing in interviews when I hit the hard realisation that what I learned till now was not enough. I need to self-learn more and interact more with people and learn from stories that people experienced. I landed up a job at a EDA based firm at Bangalore and decided to move in there.

Time really flies and it had been already 2 years working in the Software Industry. I met with a lot of people, made friends and shared some good experiences. I became aware of the meetup culture, realised writing code is not the only way that makes you a good developer, realised that communication is a key factor in conveying your ideas.

I became part of the HydPy Community and organised 2 conferences – PyConf Hyderabad 2017 and PyCon India 2018 . I met some like minded people passionate about technology and flourishing the Python Community at Hyderabad. I still am a part of the Community and hope to continue doing so.

I also attended the dgplug Summer Training in 2018, made many friends there as well whom I keep meeting during conferences. They are a really amazing community and there is always something to learn from each of the IRC conversations.

I interacted with a lot of people from Python and open source Community. Going to Conferences and meetup is really a good way to interact with some awesome people and sharing their knowledge. But it’s also true that you really need to work on something tangible and develop your skills. Then only you can move forward and also put the experiences to use.

I have been living in the heart of the fast growing city at Hyderabad. It’s been a nice experience here as a whole (except from traffic on rainy days!). I explored to places around in the initial days. Some significant events happened during my stay – the GES 2018 was held, Hyderabad metro rail was inaugurated, the first IKEA store in India opened up . I Spent my first Durga puja away from home. Realised that Kolkata Biryani is still better than Hyderabadi Biryani. Saw more buildings, Tech parks and flyovers being constructed in Rapid phase. Got to experience the hot summer and pleasant winters. All together it had been a good time in the city of Nizams. Hope to come again here someday !

It’s been 2.5 months while I came here at Bangalore. It’s called the Garden City of India because of the amount of greenery it has. But I would rather call it a City of Traffic ! Dealing with traffic can be terrifying here. Nevertheless there is a lot of greenery left and I see palm trees here and there quite often. The area I live is booming with an array of multinational companies, I also observed more number of semiconductor based companies. Rightly calling it “Silicon Valley of India”. It’s pretty early to state my experience in this city so maybe I will write about it in the next phase

So what next ? I want to make my time here more productive and also develop some good habits that I have been lingering about. Get into the habit of writing, take time out for more self-learning, contribute to open source more often, interact with more people, put the experiences to use as much as I can, get more exercise and cut laziness. In fact I got me a cycle and have been doing my daily commute with it! I know now it’s more talk than work now. But I want this blog post as a reminder so that whenever I wander off I can come to this page and find what I need to do! And keep myself prepared for the next phase.

Chromium Embedded Framework (CEF) is a framework for embedding Chromium-based browsers in other applications. Chromium itself isn’t a extensible library. It helps you to embed a chromium browser in a native desktop application. The project was started by Marshall Greenblatt in 2009 as an open source project and since then it has been used by a number of popular applications like Spotify, Amazon Music, Unreal Engine, Adobe Acrobat, Steam and many more (full list can be found in this wiki). CEF is supported for Windows, Linux and macOS platforms.

There are 2 versions of CEF – CEF1 and CEF3. CEF1 is a single process implementation based in Chrome Webkit API. It’s no longer supported or maintained. CEF3 is a multiprocess implementation based on Chromium Content API and has performance similar to Google Chrome.

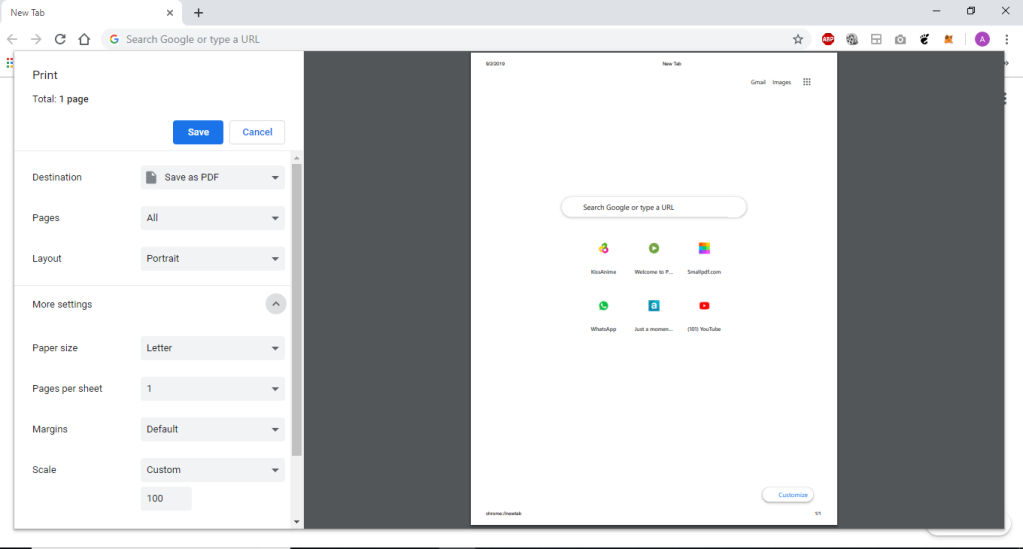

The purpose of writing this article is to document the work that I did while working on the CEF project. The major focus is on the Print Preview addition to CEF upstream project where I worked on.

For the past 1 year I have been working on CEF as a part of my day job. Initially the work was to maintain updates of CEF with every Chromium version released. We had a fork of the CEF open source project in which we applied some extra patches as per the requirements of the custom desktop application that used it. Building CEF was quite similar to building Chromium. It had a build script which was mostly used from downloading the code, building and packaging the binaries. Everything is documented here in this link . Upgrading the CEF was quite some task since building CEF took a lot of time and resources (a lot of CPU cores and a lot of Memory) and since CEF was based out of chromium I had to skim through parts of chromium code. Chromium has a nice developer documentation and a good code search engine that eased a lot of things. But owing to the fact that chromium has a huge codebase the documentation was outdated in few areas.

The interesting part of the CEF project came when I was handed over a work of a missing piece in CEF. Chromium supports in browser print preview where you can preview pages before printing, similar to one shown in the picture below.

CEF didn’t support this feature and had the legacy print menu where you cannot preview the pages to be printed.

This meant applications that used CEF couldn’t support print preview within them. The task was to make print preview available in CEF.

The work started with CEF version 3112 (supported chromium v60) and was in a working state in CEF 3239 (supported chromium v63) in our CEF fork. Then the change was supported only in Windows since our desktop application that used it was a Windows only application. I was handed over the work in CEF 3325 (supported chromium v65) where the following specs already existed in the Print Preview patch. The relevant blocks of code is available in the CEF source code now.

enable_service_discovery is disabled nowCefPrintViewManager::PrintPreviewNow() will handle print-previewCefPrintViewManager will not handle the print now. It handles the PrintToPdf function exposed through browserHost. Also, it listens to two print preview message PrintHostMsg_RequestPrintPreview, PrintHostMsg_ShowScriptedPrintPreview and generate the browserInfo for print preview dialogweb_modal::WebContentsModalDialogManagerDelegate and web_modal::WebContentsModalDialogHost required by the constrained window of print preview dialogGetDialogPosition() and GetMaximumDialogSize() used by browser platform_delegateProfile preferences required for printingConstrainerWindowsViewsClient class which is called in browser_main.ccCefPrintViewManager::InitializePrintPreview() initializes the print preview dialog which further calls PrintPreviewHelper::Initialize() which generates the browser_info required by print previewCefPrintViewManagerBase and its associated methods from CefPrintViewManager. Those methods are redundant after the print preview changesswitches::kDisablePrintPreview in CefPrintRenderFrameHelperDelegate::IsPrintPreviewEnabled() to determine whether print preview is enabledAfter I took over the CEF print preview work I did a number of changes to print preview, specs of which is documented below

print_preview_resources.pak in BUILD.gn to fix blank screen error which came from chromium v72 onwards because of updated print preview UIPrintPreviewEnabled() to extensions_utilswitches::kDisablePrintPreview to kSwitchNames in CefContentBrowserClient::AppendExtraCommandLineSwitches() to disable print preview on using --disable-print-preview command line switchprint_header_footer_1478_1565 chromium patch since it’s no longer required to disable print preview by defaultWebContentsDialogHelper() to wrap web_modal::WebContentsModalDialogManagerDelegate and web_modal::WebContentsModalDialogHost interface methods. Move it in a separate header and cc fileCefBrowserHostImpl::IsWindowless() methodis_windowless parameter in CefPrintRenderFrameHelperDelegate object from CefContentRendererClient::MaybeCreateBrowser() method in content_renderer_client.cc DownloadPrefs::FromBrowserContext for CEF use case. Add GetDownloadPrefs() to CefBrowserContext and use that to get the download_prefs in chromiumextra_info param to CreatePopupBrowserInfo()GetNativeView() method for MacOS platform delegate and update GetMaximumDialogSize() methodextensions::PrintPreviewEnabled()) since the print dialog crashes on printShowCefSaveAsDialog() and SaveAsDialogDismissed() to PdfPrinterHandler.Integrating print preview was a big and non-trivial change in CEF since not only it needed good understanding of the printing code in chromium but also the print preview feature was getting constant updates from Chromium. The code was constantly changing with every chromium version released and the print preview chromium documentation was outdated.

CEF3 has a multiprocess architecture similar to chromium’s documented here . There is a main browser process and multiple renderer processes. Debugging multiprocess applications can be trickier. I used Visual Studio which made things a bit easier as it has the Child process Debugging power tool which is a extension that would automatically attach Child processes and helped to debug into the child processes whenever they spawned up.

The chromium v72 version introduced a new print preview UI which broke the renderer, we got a blank screen in print previw. It took weeks to figure out what was wrong. Finally it came out that a pak file was missing which needed to be included in BUILD.gn. I had to spend multiple debugging session with my team to figure that out.

Also it had to be supported for all platforms (Windows, Linux, macOS) to qualify to be merged to the CEF upstream repo. Each platform had a different way of rendering dialogs. Though the windows support was working the Linux and MacOS weren’t supported in the changes yet. I added the support for Linux platform after building CEF in a linux VM. The MacOS support finally didn’t work out and we had to keep using legacy print for Mac platform. Though I needed to ensure the change built fine in Mac, so I had to build it for Mac as well (I was given a separate Mac machine just because of this since Mac doesn’t ship VM images) and in fact the change broke the MacOS build so the issues had to be fixed.

Even after all these changes the functionality broke after a architectural change was made in CEF in version 3770 (supported chromium v75) in this commit which rendered blank screen during print preview. Marshall took over the work from there and made a number of changes in the patch which can be seen in this PR chromiumembedded/cef#126 . The change was added manually in master revision 1669c0a on 20th July. It will be supported from the next CEF version (supported chromium v77). The current implementation supports print preview in Windows and Linux platforms via a --enable-print-preview flag.

Overall it has been a good experience working in the project and I got to know a lot about chromium itself. This was my first major contribution in a C++ based project. It helped me to understand how a browser works under the hood, how a browser renders web pages and processes javascript. I hope to carry forward this knowledge in some similar browser based project in future.

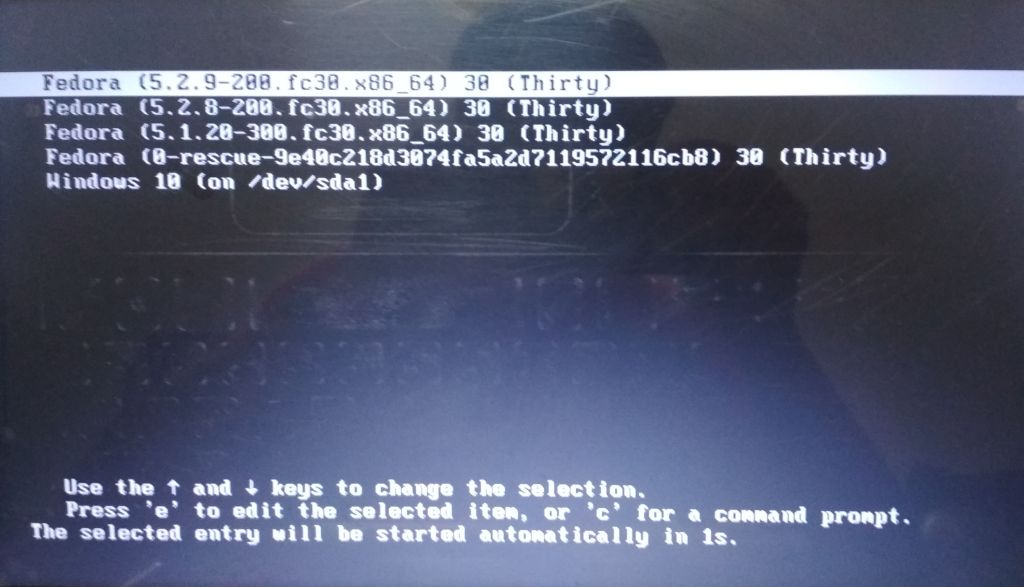

About 3 months back I installed the newly released Fedora 30 OS – dual boot with Windows 10 in my PC. This blog post comes from the notes I made during that time and as I troubleshooting note for future.

I had Fedora 29 and Windows 10 dual boot in my PC before that. The Fedora install partition was running out of space due to low disk space selected during my last install so I decided to do a clean reinstall this time. I made a live usb using the Fedora media writer for windows and the Fedora 30 iso available at getfedora download page. I followed the usual steps that I followed for installing earlier Linux installations in my PC, similar to what mentioned in this video.

The installation went fine and finally I was ready to boot from my hard drive. Then I saw what is called the Dreaded GRUB2 boot prompt.

error: unknown filesystem. Entering rescue mode... grub rescue>

I quickly started finding way to fix the grub. The first thing I found was the steps listed in this video. I had to choose the right partition from which the bootloader would load.

grub rescue> ls (hd0) (hd0,msdos4) (hd0,msdos3) (hd0,msdos2) (hd0,msdos1)

This shows the various partitions in my hard drive. One of this is the linux partition where my Fedora 30 OS is installed. I need to list all the partitions and one of them will have the linux filesystem.

grub rescue> ls (hd0, msdos4)/ bin/ boot/ dev/ etc/ home/ lib/ lib64/ lost+found/ media/ mnt/ opt/ proc/ root/ run/ sbin/ srv/ sys/ tmp/ usr/ var/

Now I ran the following commands and waited for the system to boot up

grub rescue> set prefix=(hd0, msdos4)/boot/grub2 grub rescue> insmod normal grub rescue> normal

I got the same grub rescue boot prompt but this time with a different error

error: file '/boot/grub2/i386-pc/normal.mod' not found. Entering rescue mode… grub rescue>

The issue was that, the i386-pc folder was missing in the /boot/grub2 folder. The fix that I found for the issue was related to grub2 not being properly installed at the boot location. Luckily I was able to boot Fedora from UEFI Boot option from the Boot Menu. I logged into Fedora and reinstalled grub2.

$ sudo grub2-install /dev/sda $ dnf install grub2-efi

I hoped that this would fix the issue, but it again came down to the same starting point loading the grub2 rescue prompt.

I further searched and landed up in the fedora grub2 manual . After reading it I realized there is something wrong in my grub2 configuration. I booted into my OS using UEFI boot and opened /boot/grub2/grub.cfg file. The entry for Windows was missing. I followed the steps given in this section. I went to the grub rescue prompt and fired the following commands

grub rescue> set root=(hd0, msdos4) grub rescue> linux /boot/vmlinuz-5.1.20-300.fc30.x86_64 root=/dev/sda4 grub rescue> initrd /boot/initramfs-5.1.20-300.fc30.x86_64.img grub rescue> boot

Then I recreated the grub.cfg file using these commands

$ grub2-mkconfig -o /boot/grub2/grub.cfg $ grub2-install --boot-directory=/boot /dev/sda

Voila ! I was able to see the grub menu with all the boot entries.

So why did the issue actually occur ? It didn’t happend in the past whenever I did a fresh installation nor it’s a issue specific to Fedora 30. I tried to dig the actual cause of the issue and after a little finding and introspection I came to this conclusion.

Using the Fedora media writer was in fact the place where I unknowingly did a mistake. I usually used UNetbootin for creating my linux live usb in past which made the images to boot in BIOS only mode. The Fedora media writer enables to boot in both UEFI and BIOS mode. My Windows installation boots via Legacy boot and it has always been like that and since I have been using UNetbootin earlier, it always picked up Boot via BIOS for the live images. This time while creating the Fedora 30 image using Fedora Media writer the default boot mode picked up was UEFI and that created a EFI GRUB2 configuration. Now when I booted the live usb I just booted from the “Boot from USB” option without noticing whether it was UEFI or BIOS and I went ahead and did Fedora 30 installation. Now my default boot option was Legacy Boot (since it supports the Windows boot) while the installed Fedora OS grub loader was created to boot in EFI mode. That in turn caused this problem due to a corrupted grub2 configuration.

Always be careful while created OS images. Check how it is supposed to boot. In case of dual boots all the OS must boot via the same mode – either both UEFI or both BIOS. So make sure when you are doing a clean install of the second OS it must boot via same mode as the already installed OS.

wotw, kind

i can afford to get preachy this week, because i turned a year older.

so, be kind!

to your parents,

your significant other,

your family,

your friends,

the folks around you,

to your dog and stray dogs and all the animals,

to whoever you can.

and most importantly

Be kind to yourself!

And while I think Bezos is a kook, his grandpa certainly was not.

My grandfather was a highly intelligent, quiet man. He had never said a harsh word to me, and maybe this was to be the first time? Or maybe he would ask that I get back in the car and apologize to my grandmother. I had no experience in this realm with my grandparents and no way to gauge what the consequences might be. We stopped beside the trailer. My grandfather looked at me, and after a bit of silence, he gently and calmly said, “Jeff, one day you’ll understand that it’s harder to be kind than clever.”

It’s harder to be kind than clever. So it’s no small thing I ask of you. Do a kind deed every day. Your life will be the better for it.

Today I tried to install UBUNTU 18.04 LTS into my friend's laptop. I will say it was quite a mixed experience. Below are the following steps I did for installing:

Click on windows icon type “Create and format Disc”. There you will see the following :

Meanwhile, download the ISO file for UBUNTU 18.04 (LTS bionic). Also, download Etcher for creating flash USB.

Give the path for downloaded iso file.

Go to the BIOS setup. For my dell Vostro laptop. I will press F12 just as the dell image logo is visible.

* Go to your USB drive

* Select “Open UBUNTU without installing”

* Go to your USB drive

* Select “Open UBUNTU without installing”

Select Normal Installation. Uncheck download updates while installing Ubuntu.

Now there are two steps 1. Easy Step 2. Creating our configuration

Now there are two steps 1. Easy Step 2. Creating our configuration

Select “Install Ubuntu alongside Windows Boot Manager” and goto Step 8

Select “Something Else“

Find where there is free space you have created.

Find where there is free space you have created.

Select and click on the “+” icon and we will allocate the space for “swap” and “home” and “root” directory.

Select logical memory and / signifies root, allocate 20 GB for it.

Select swap memory for 4GB (half the RAM size is preferred, for my Laptop of 8GB, it's 4096 MB).

Now give the rest of the space for the home.

Now select install now.

Click on continue. And wait for the installation to finish.

Yay! we are all done.

Click on continue. And wait for the installation to finish.

Yay! we are all done.

wotw, feminism

does not mean equality in the literal sense.

more for women to have the freedom, to be themselves.

the freedom, to work and play and live in peace.

means, a movement to get from under the thumb of millennia of social mores and things have always been this way, so suck it up.

means to actually live to the basic ideal of live and let live.

I am reading the book Linux for you and me, and some of the commands I got to know and it's work!! Gnome Terminal Here in this terminal, we write the commands.

For example: [darshna@localhost~]

Here Darshna is the username, localhost is the hostname and this symbol `#~ is the directory name.

Following some commands are: * date command= tells us about current time and date in IST(Indian standard time) * cal command= displays the default present calendar. * whoami command= tells which user account you are using in this system. * id command=displays real user id. * pwd comma= helps to find the absolute path of the current directory. * cd command= this command helps you to change your current directory.

Whenever we think of programs or algorithms we think of steps that are supposed to be done one after the other to achieve a particular goal. Let's take a very simple example of a function that is supposed to greet a person:

def greeter(name):

"""Greeting function"""

print(f"Hello {name}")

greeter(Guido) #1

greeter(Luciano) #2

greeter(Kushal) #3

"""

Output:

Hello Guido

Hello Luciano

Hello Kushal

"""

Here the function greeter() greets the person who's name is passed through it. But it does it sequentially i.e when greeter(Guido) will run the whole program will block it's state unless the function executes successfully or not. If it runs successfully then only the second and third function calls will be made.

This familiar style of programming is called sequential programming.

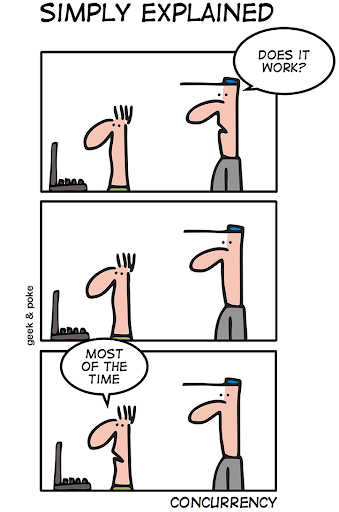

Sequential programming is comparatively easy to understand and most of the time fit the use case. But sometimes you need to get most out of your system for any X reason, the most common substituent of X, I could find is scaling your application.

Though greeter() is just a toy example but a real-world application with real user need to work the same even on huge amount of traffic it receives. Every time you get that spike in your traffic/daily active user you can't just add more hardware so one of the best solutions at times is to utilize your current system to the fullest. Thus Concurrency comes into the picture.

Concurrency is about dealing with lots of things at once. – Rob Pike

Before I move forward, I know what most of the people will say. If it's that important why at work/college/park/metro station/.. people are not talking about it? Why most of the people still use sequential programming patterns while coding?

Because of a very simple reason, it's not easy to wrap your head around and it's very easy to write sequential code pretending to be concurrent code.

I got to know about this programming style very late and later when I talked to people they said the same thing. It's not easy to code, you can easily skip the best practices and very hard to debug so most of the people try to stick to the normal style of programming.

The two most popular ways(techniques) of dealing with concurrency in Python is through:

Threading: Python has a threading module that helps in writing multi-threaded code. You can spawn independent threads share common states (just like a common variable that is accessed by two independent threads).

Let's re-write that greeter() function again now with threads.

import threading

import time

def main():

thread1 = threading.Thread(target=greeter, args=('Guido',))

thread2 = threading.Thread(target=greeter, args=('Luciano',))

thread3 = threading.Thread(target=greeter, args=('Kushal',))

thread1.start()

thread2.start()

thread3.start()

def greeter(name):

print("Hello {}".format(name))

time.sleep(1)

if __name__ == '__main__':

main()

"""

Output:

Hello Guido

Hello Luciano

Hello Kushal

"""

Here thread1, thread2, thread3 are three independent threads that run alongside main thread of the interpreter. This may look it is running in parallel but it's not. Whenever the thread waits(here it's a simple function so you might see that), this wait can be anything reading from a socket, writing to a socket, reading from a Database. Its control is passed on to the other thread in the queue. In threading, this switching is done by the operating system(preemptive multitasking).

Though threads seem to be a good way to write multithreaded code it does have some problems too.

Asyncio: Python introduced asyncio package in 3.4, which followed a different approach of doing concurrency. It brought up the concept of coroutines. A coroutine is a restartable function that can be awaited(paused) and restarted at any given point. Unlike threads, the user decides which coroutine should be executed next. Thus this became cooperative multitasking.

Asyncio brought new keywords like async and await. A coroutine is defined with the async keyword and is awaited so that the waiting time can be utilized by the other coroutine.

Let's rewrite the greeter() again but now using the Asyncio.

import asyncio

async def greeter(name):

await asyncio.sleep(1)

print(f'Hello {name}')

def main():

loop = asyncio.get_event_loop()

task1 = loop.create_task(greeter('Guido'))

task2 = loop.create_task(greeter('Luciano'))

task3 = loop.create_task(greeter('Kushal'))

final_task = asyncio.gather(task1, task2, task3)

loop.run_until_complete(final_task)

if __name__ == '__main__':

main()

"""

Output:

Hello Guido

Hello Luciano

Hello Kushal

"""

Looking at the above code we see some of the not so common jargons thrown around, event loop, tasks, and a new sleep function. Let's understand them before we dissect the code and understand it's working.

greeter() is a coroutine which prints the greeting, though this is a simple example but in an I/0 bound process a coroutine needs to wait so await helps the program to wait and get off the event loop. The async.sleep() function is different from the time.sleep() because async.sleep() is a non blocking call i.e it does not hold the program until the execution is completed. The argument given to the async.sleep() is the at the most value of the wait.Now let's move on to the code. Here task1,task2 and task3 work concurrently calling the coroutine. Once all the tasked are gathered the event loop runs until all the tasks are completed.

I hope this gives you a brief overview of Concurrency, we would be diving deep into both threading and asyncio and how can we use async for web applications using aiohttp and quart.

Stay tuned this will be a multi-part series.

While reading about concurrency you might a lot of other topics that you might confuse concurrency with so let's look at them now just so we know how is concurrency different.

Concurrency is about dealing with lots of things at once. Parallelism is about doing lots of things at once. Not the same, but related. One is about structure, one is about execution. Concurrency provides a way to structure a solution to solve a problem that may (but not necessarily) be parallelizable. -Rob Pike

Parallesim: doing tasks simultaneously, this is different from concurrency as in parallelism all the tasks run side by side without waiting(sleep) for other tasks, unlike a concurrent task. The method to achieve is called multiprocessing. Multiprocessing is well suited for CPU bound tasks as it distributes tasks over different cores of the CPU. Sadly Python's GIL doesn't do go well with CPU bound tasks.

Single-Threaded/Multi-Threaded: Python is a single-threaded language because of the Python's GIL but you can use multiple threads. These threads run along with the main thread. So threading, in general, is the method to achieve concurrency.

Asynchronous:, asynchrony is used to present the idea of either concurrent or parallel task and when we talk about asynchronous execution the tasks can correspond to different threads, processes or even servers.

Part 2: Talking Concurrency: Asyncio

#Did you know? We can assign multiple assignments in a single line.

For example:a,b = 10,20

a=10

b=20

You can use this method for swapping two numbers.

a,b=b,a

a=20

b=10

To understand how it works, we need to understand a datatype called tuple. We use a comma, to create the tuple; in the right-hand side we create the tuple(called tuple packing) and in the left-hand side we do tuple unpacking into a new tuple.

Let us take an example tp understnd more clearly.

data=("Darshna Das, West Bengal, Python")

name, state, language=data

name=Darshna Das

state=West Bengal

language=Python

Formatting a string Let us now see different methods to format string. .format method

name="Darshna"

language="Python"

msg="{0} loves {1}". .format(name,language)

print(msg)

Darshna loves Python.

Interesting fact In Python 3.6 a new way to do string formatting introduces a new concept called f-string.

name=Darshna

lanuguage=Python

msg=f"{name} loves {language}

print(msg)

Darshna loves Python.

f-strings provide a simple and readable way to embed Python expressions in a string.

I recently started reading the pym book suggested by folks at #dgplug. Since I have been programming in Python since an year and a half, I could go through the basics fairly quick. Here are the topics I covered:

However, file handling is something I have rarely used till now. This blog talks about the it and some of the great takeaways.

A file can be opened in three modes: ### Read: Opens the file in read-only mode. The file cannot be edited or added content to. The syntax for the same is :

>>> f = open('requirements.txt' , 'r')

### Write: Opens the file in write, you can make desired changes to the file. The syntax for the same is:

>>> f = open('requirements.txt' , 'r')

### Append: Opens file in append mode. You can append further content, but cannot change or modify past content. The syntax for the same is:

>>> f = open('requirements.txt' , 'a')

When a file is openened in read mode, the file pointer is at the beginning of the file. There are different functions for reading the file:

It reads the entire file at once. The file pointer traverses the entire file on calling this function. Therefore, calling this function again will have no effect, since the file pointer is already at EOF. Syntax for the same is:

>>> f.read()

'selenium >= 3.141.0\npython-telegram-bot >= 11.1.0\ndatetime >= 4.3\nargparse >= 1.4.0\nwebdriver-manager >= 1.7\nplaysound >= 1.2.2'

This function moves the file pointer to the beginning of the next line hence outputting one line at a time. Syntax for readline() function is :

>>> f.readline()

'selenium >= 3.141.0\n'

>>> f.readline()

'python-telegram-bot >= 11.1.0\n'

Reads all the lines in a file and returens a list.

>>> f.readlines()

['selenium >= 3.141.0\n', 'python-telegram-bot >= 11.1.0\n', 'datetime >= 4.3\n', 'argparse >= 1.4.0\n', 'webdriver-manager >= 1.7\n', 'playsound >= 1.2.2']

Now, we should always close a file we opened when not in use. Not closing it increases memory usage and degrades the quality of code. Python offers nice functionality to take care of file closing by itself:

`with keyword can be used as follows:

>>> with open('requirements.txt' , 'r') as f:

... f.read()

...

'selenium >= 3.141.0\npython-telegram-bot >= 11.1.0\ndatetime >= 4.3\nargparse >= 1.4.0\nwebdriver-manager >= 1.7\nplaysound >= 1.2.2'

The .write() function can be easily used to write into a file. This will place the file pointer to the beginning and over-write the file completely. Here's how that works:

>>> f = open('requirements.txt' , 'w')

>>> f.write('tgbot\n')

6

The return value '6' denotes the number of characters written into the file

Hope you enjoyed reading the blog, :)

In continuation with the last blog, we now proceed with the following topic.

Variables and Datatype Following identifiers are used as reserved words, or keywords of the language, and cannot be used as ordinary identifiers. They must be typed exactly as written here:

false class finally

is return

none continue for

lambda try

true def from

non-local while

and del global

not with

as elif if

or yield

assert else import

pass

break except in

raise

In Python, we don't specify what kind of data we are going to put in a variable. So, we can directly write abc=1 and abc will become integer datatype.

If we write abc= 1.0 abc will become of floating type.

Eg:-

a= 13

b=23

a+b

36

From the given example we understand that to declare a variable in Python we just need to type the name and the value. Python can also manipulate strings, they can be enclosed in single quotes or double-quotes.

Reading input from keyboard Generally, the real-life Python codes do not need to read input from the keyboard. In Python, we use an input function to do input(“string to show”); this will return a string as output.

Let us write a program to read a number from the keyboard and check if it is less than 100 or not.

testhundred.py

#!/usr/bin/env python3

number=int(input("Enter an integer:"))

if number<100:

print("Your no. is smaller than 100")

else:

print("Your number is greater than 100")

output:

$ ./testhundered.py

Enter an integer: 13

Your number is smaller than 100.

$ ./testhunderd.py

Enter an integer: 123

Your number is greater than 100.

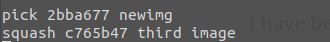

Contributing to open source is one of the best ways to hone up programming skills. Along with writing quality code, using a version control tool plays a crucial role while contributing. There are a lot of source control management platforms such as github , gitlab , phabricator etc. This blog discusses about making code contributions via github.

Pull request, as the name suggests is a patch of code that is sent to original code base to be merged into the source code after review. Usually, maintainers of the project will review the PR(pull request) and merge it into original code base if everything looks okay.

First off, there should be a fork of the upstream repository. Fork is nothing but a copy of the upstream repository onto your own github. This is where you will be pushing your changes. (Since you own it :D)

Then, clone of repository so as to do the changes locally and testing them before sending a patch. This should be fairly simple using

git clone <repository URL>

Now, if we want to make a change to the source code, we should always ensure that the master/development branch to be always in sync with upstream. You would definitely not like messing the master branch , and if in case the issue's priority is not high, the PR will be pending with the changes in master branch.

Other than that, the master branch is 'supposed' to have the updated code(or the production code), the rest of the features are supposed to be done on separate branches before being pushed into production.

Thus, it it is always a good practice to make new branches for each pull request to be opened. To do this use

git checkout -b <branch_name> -t upstream/master

This will make the branch in sync with the upstream. If upstream is not added, you can manually add it to remote using

git remote add upstream <upstream_URL>

Or alternatively, you can just create a branch and fetch from upstream using:

git checkout -b <branch_name>

git fetch upstream

make the required changes and commit them via git add and git commit commands

After the changes are done, push to your code via

git push origin <branch_name>

Usually, just after pushing to github, you would button when you open your repository(on github) clicking on which a PR will be made. It should look something as follows:

If that doesn't show automatically, navigate to the branch(on github) and make a PR.

That is it! Now keeps doing the requested changes(if asked) locally and keep pushing code on the created branch till the point it is fit for merging.

Recently I was browsing through git add’s manual to find out how to split hunks and stage them.

I found that there is an option called --intent-to-add which is pretty useful.

Like it says, one can use it to add an untracked file to git’s index but not stage the file.

An untracked file doesn’t show up in diffs and cannot be staged by hunks since nothing is in the index yet. So by adding it to the index, we just track it and can stage when ready.

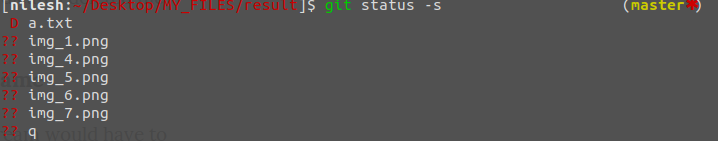

This blog is in a git repo, so lets track the current post. When the file is not yet tracked, we see

$ git status

On branch master

Your branch is up to date with 'origin/master'.

Untracked files:

(use "git add <file>..." to include in what will be committed)

content/post/shorts/TIL Use git to add untracked file to index.mdNow when you add it to the index,

$ git add --intent-to-add content/post/shorts/TIL\ Use\ git\ to\ add\ untracked\ file\ to\ index.md

$ git status

On branch master

Your branch is up to date with 'origin/master'.

Changes not staged for commit:

(use "git add <file>..." to update what will be committed)

(use "git checkout -- <file>..." to discard changes in working directory)

new file: content/post/shorts/TIL Use git to add untracked file to index.md

no changes added to commit (use "git add" and/or "git commit -a")With this you can look at a proper output of git diff,

diff --git a/content/post/shorts/TIL Use git to add untracked file to index.md b/content/post/shorts/TIL Use git to add untracked file to index.md

new file mode 100644

index 0000000..ff5d586

--- /dev/null

+++ b/content/post/shorts/TIL Use git to add untracked file to index.md

@@ -0,0 +1,45 @@

+---

+title: "TIL Git: Add Untracked File to Index"

+date: 2019-08-14T16:10:24+02:00

+tags:

+ - git

+categories:

+ - dgplug

+---

+Recently I was browsing through `git add`'s manual to find out how to split hunks and stage them.

+I found that there is an option called `--intent-to-add` which is pretty useful.

+Like it says, one can use it to add an untracked file to git's index but not stage the file.

+

+# Why is it useful?

+An untracked file doesn't show up in diffs and cannot be staged by hunks since nothing is in the index yet.

+So by adding it to the index, we just track it and can stage when ready.

+

+# Example

+This blog is in a git repo, so lets track the current post.

+When the file is not yet tracked, we see

+```git

+$ git status

+On branch master

+Your branch is up to date with 'origin/master'.

+

+Untracked files:

+ (use "git add <file>..." to include in what will be committed)

+

+ content/post/shorts/TIL Use git to add untracked file to index.md

+```

+

+Now to when you add it to the index,

+```git

+$ git add --intent-to-add content/post/shorts/TIL\ Use\ git\ to\ add\ untracked\ file\ to\ index.md

+$ git status

+On branch master

+Your branch is up to date with 'origin/master'.

+

+Changes not staged for commit:

+ (use "git add <file>..." to update what will be committed)

+ (use "git checkout -- <file>..." to discard changes in working directory)

+

+ new file: content/post/shorts/TIL Use git to add untracked file to index.md

+

+no changes added to commit (use "git add" and/or "git commit -a")

+```

I guess this has gone enough meta so lets wrap it up. Enjoy and explore git, you will always find something new :)

by Jagannathan Tiruvallur Eachambadi (jagannathante@gmail.com) at August 14, 2019 02:10 PM

wotw, xenophobia

means the fear of things changing. the ability to not see the other side in an open hearted manner. causing one to become close minded and intolerant

and if you want to know if Nujeen made it?

After resuming my study I've learned about File handling (I can recall file handling in C).

File handling Python gives us an easy way to manipulate files. We can divide files in two parts, one is test file which contain simple text, and another one is binary file which contain binary data which is only readable by computer.

File opening The key function for working with files in Python is the open() function. The open() function takes two parameters; filename, and mode. There are four different methods (modes) for opening a file:

"r" - Read - Default value. Opens a file for reading, error if the file does not exist

"w" - Write - Opens a file for writing, creates the file if it does not exist

"a" - Append - Opens a file for appending, creates the file if it does not exist

"x" - Create - Creates the specified file, returns an error if the file exists

Creating a file To create a new empty file:

>>> f = open("file.txt", "x")

To Create a new file if it does not exist:

>>> f = open("file.txt", "w")

Opening a file To open a file we use open() function. It requires two arguments, first the file path or file name, second which mode it should open. If we don't mention any mode then it will open the file as read only.

>>> f = open ("file.txt")

>>> f

<_io.TextIOWrapper name='file.txt' mode='r' encoding='UTF-8'>

Closing a file After opening a file one should always close the opened file. We use method close() for this.

>>> f = open ("file.txt")

>>> f

<_io.TextIOWrapper name='file.txt' mode='r' encoding='UTF-8'>

>>> f.close()

Reading a file To read the whole file at once use the read() method.

>>> f = open("sample.txt")

>>> f.read()

'I am Rayan\nI live in Bangalore\nI am from West Bengal\n'

If we call read() again it will return empty string as it already read the whole file. readline() can help you to read one line each time from the file.

>>> f = open("sample.txt")

>>> f.readline()

'I am Rayan\n'

>>> f.readline()

'I live in Bangalore\n'

To read all the lines in a list we use readlines() method.

>>> f = open("sample.txt")

>>> f.readlines()

['I am Rayan\n', 'I live in Bangalore\n', 'I am from West Bengal\n']

We can loop through the lines in a file object.

>>> f = open("sample.txt")

>>> for x in f:

... print(x, end=' ')

...

I am Rayan

I live in Bangalore

I am from West Bengal

Example:

>>> f = open("sample.txt", "w")

>>> f.write("I am Rayan\nI live in Bangalore\nI am from West Bengal")

>>> f.close()

>>> f = open("sample.txt", "r")

>>> print(f.read())

I am Rayan

I live in Bangalore

I am from West Bengal

Using the with statement (which I found so cool) In real life scenarios we should try to use with statement. It will take care of closing the file for us.

This is the continuation of the Python learning series from the book Python for you and me.

Modules – These are Python files that contain different function definitions or variables that can be reused. These files are always ended with the .py extension.

For writing any language you need an editor, similarly here we use mu editor which was developed by Nicholas Tollervey. For installation, the total download size is around 150MB. We can open the editor from the command line type mu in the terminal. Give the following command line, type mu in the terminal

python3 -m mu

Executing Code – Write your code you want to execute and save it. – Use a proper file name. ( end it with .py) – Click on the run button to execute the code. – If you want to a REPL, then click REPL button.

NOTE: Do not give any unnecessary space in the file name, use _ if required, for an example download_photos.py

Today I read about few things those are listed below:

Data Structures in Python Data structures is a way to store and organize data. Obviously, some data structures are good in one set of problems, but terrible in other ones. The right choice of data structure can make your code faster, more efficient with memory and even more readable for other human beings. Python has few in-built data structures.

Lists List is a sequence of elements. It can store anything: numbers, strings, other lists, functions and etc. The fact that it can store anything is great but it has a disadvantage. This kind of list will require more memory. Let’s take a look at a basic example of list:

# create list

>>> list= ['Noname', 'Rayan', 'xyz', 100, 42, 55]

>>> list

['Noname', 'Rayan', 'xyz', 100, 42, 55]

# check if element is in list

>>> 42 in list

True

Tuples Another way to store a sequence of elements is to use tuples. Tuple is basically the same thing as list but with one difference. You can’t add or remove elements from tuples after initialization. It’s immutable data structure.

>>> a = ('Noname', 'Rayan', 'xyz', 100, 42, 55)

a

>>> ('Noname', 'Rayan', 'xyz', 100, 42, 55)

Dictionary nother important data structure is dictionary. The difference between dictionary and list is that you access elements in dictionary by key, not by index.

>>> dict = {'Rayan': 'Das','Kushal': 'Das','Sayan': 'Chowdhury'}

>>> dict

{'Rayan': 'Das', 'Kushal': 'Das', 'Sayan': 'Chowdhury'}

Sets Set stores only unique elements.

>>> letters = {'a', 'b', 'c'}

>>> 'c' in letters

True

>>> letters.add('d')

>>> letters

{'c', 'b', 'd', 'a'}

Strings In Python we declare strings in between “” or ‘’ or ‘’’ ‘’’ or “”” “”“ There are different methods available for strings.

Functions A function is a block of code which only runs when it is called. You can pass data, known as parameters, into a function. A function can return data as a result.

Defining a function This way we can define a function.

>>> def func(params):

... statement1

... statement2

Calling a function

>>> def func():

... print("hello from func")

...

>>> func()

hello from func

Local and Global variables

Keyward only arguments We can also mark the arguments of function as keyword only. That way while calling the function, the user will be forced to use correct keyword for each parameter.

Docstrings We use docstrings in Python to explain how to use the code, it will be useful in interactive mode and to create auto-documentation.

Got to know about Higher-order function. It does at least one of the following step inside: -Takes one or more functions as argument. -Returns another function as output.

Map function map is a very useful higher order function in Python. It takes one function and an iterator as input and then applies the function on each value of the iterator and returns a list of results.

I've started Python again from Pym book by Kushal

Read about following topic and solved few basic problems.

I've paused here. Cleared all my basics again till now. Basics are the key ingredients in long run I believe.

I have just started with Python, from the book Python For you and me!

Some basic guidelines I have jotted down. The first program to print the “Hello World !”

print ("Hello World!") = Hello World !

While writing this above code, we write it into a source file. We can use any text editor. For example:

#!/usr/bin/env python

print ("Hello World!")

On the first line, we use (#!), which is called she-bang. In python, this indicates that it should be running the code. The lines of text in python is called “strings”.

Whitespace at the beginning of a line is known as indentation, but if we use the wrong indentation it shows an error.

Basic Rules For Indentation!